Introduction

With the increased adoption of Kubernetes, I spent the past year getting certified by the Linux Foundation as a Certified Kubernetes Application Developer and Certified Kubernetes Administrator. One of the biggest obstacles on this journey was obtaining a cost-effective, exam-like sandbox in which to learn and make mistakes. To solve this issue, I automated the creation of my sandbox Kubernetes cluster, and this post is a step-by-step guide to creating your own.

Local Workstation Prerequisites

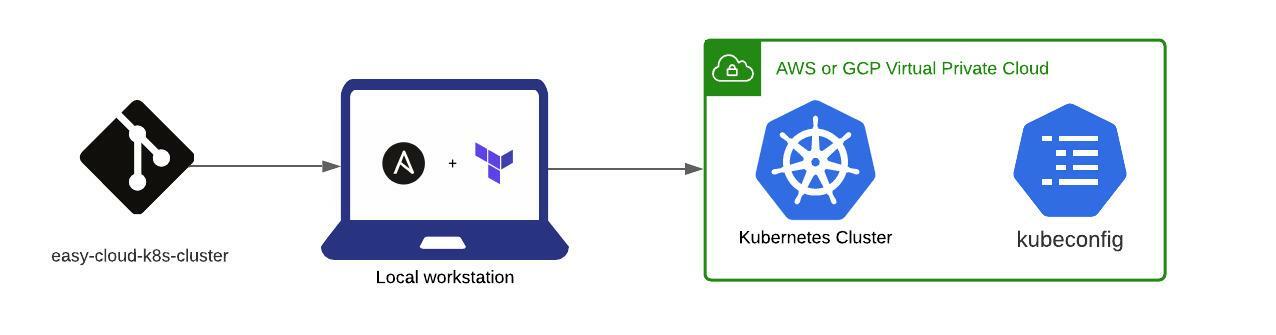

As indicated by the above diagram, you need a workstation that will provision your Kubernetes cluster into either AWS or GCP. This workstation will need the following software installed:

- Operating System: Any operating system that supports Python 3.6 or higher will suffice. I ran this on macOS Big Sur and RHEL 8, but you can also use Debian, CentOS, other versions of macOS, or any of the BSDs. Ansible will NOT run on Windows, so it is not included in this list.

- git: You’ll need git in order to clone the easy-cloud-k8s-cluster repository required for Kubernetes cluster creation.

- kubectl: The kubectl command line tool lets you control Kubernetes clusters from your local workstation. For configuration, kubectl looks for a file named config in the $HOME/.kube directory.

- Ansible Engine 2.9: Required to run the provision/bootstrap/teardown playbooks. The latest 2.9 release can be found on the releases page (2.9.24 as of this writing). You can install it via pip3; the "2.9.*" specifier tells pip to pick the newest 2.9.x release:

$ pip3 install "ansible==2.9.*"

- Terraform: Terraform is available as an executable binary, which can be downloaded from HashiCorp’s website. While the latest version as of this writing is 1.0.3, I am using 1.0.1 for this tutorial. HashiCorp maintains a releases page in case you wish to download an older version of Terraform.
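If you want a quick way to confirm these tools are installed before going further, a couple of bash functions like the ones below will do. This is a minimal sketch: check_prereqs and python_ok are hypothetical helpers written for this post, not part of the easy-cloud-k8s-cluster repository, and check_prereqs only confirms that each command is on your PATH, not which version it is.

```shell
# Hypothetical helper: report which prerequisites are on your PATH.
check_prereqs() {
  local tool missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool"
      missing=$((missing + 1))
    fi
  done
  # Succeed only when every tool was found, so callers can chain on it.
  [ "$missing" -eq 0 ]
}

# Hypothetical helper: succeed only if python3 is version 3.6 or newer.
python_ok() {
  python3 -c 'import sys; sys.exit(0 if sys.version_info >= (3, 6) else 1)'
}
```

Paste the functions into your shell, then run:

$ check_prereqs git kubectl ansible terraform python3 && python_ok

To compare versions against the ones listed above, ansible --version, terraform version, and kubectl version --client print what you have installed.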

Python Packages

The Kubernetes Ansible modules require the openshift and PyYAML packages:

$ pip3 install openshift PyYAML

Google Cloud Platform:

If you want your k8s cluster to be on GCP, the requests and google-auth libraries must be installed:

$ pip3 install requests google-auth

Alternatively, on RHEL 8 / CentOS 8, the python3-requests package can satisfy the requests library requirement:

$ sudo yum install python3-requests

Amazon Web Services:

If you want your k8s cluster to be on AWS, you must have the botocore and boto3 libraries installed:

$ pip3 install boto3 botocore
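Since the package list depends on your cloud provider, it can be handy to keep it in one place. A minimal sketch, where pip_packages_for is a hypothetical helper that simply echoes the packages listed in this section:

```shell
# Hypothetical helper: print the pip packages this section lists for a provider.
pip_packages_for() {
  # Needed by the Kubernetes Ansible modules regardless of provider.
  local common="openshift PyYAML"
  case "$1" in
    aws) echo "$common boto3 botocore" ;;
    gcp) echo "$common requests google-auth" ;;
    *)   echo "unknown provider: $1 (expected aws or gcp)" >&2; return 1 ;;
  esac
}
```

For example:

$ pip3 install $(pip_packages_for aws)

The $(...) is deliberately left unquoted so that each package name becomes a separate argument to pip3.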

Setting up your environment

- AWS:

  - AWS provides a guide to generating authentication credentials. Detailed instructions with pictures can also be found in my blog entry on Blogging-as-a-Service.

  - The playbook will look for the credentials file at $HOME/.aws/credentials, but you can change this value in easy-cloud-k8s-cluster/vars/default-vars.yml.

- GCP:

  - Google Cloud Platform allows you to create a Service Account that you can then use to manage cloud resources.

  - Google’s documentation explains how to create the Service Account.

  - After creating the Service Account, download the JSON file containing the Service Account credentials by following Google’s instructions.

  - The playbook will look for the file at /Users/<user>/.gcp/gcp-creds.json, but you can change this value in easy-cloud-k8s-cluster/vars/default-vars.yml.

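Before provisioning, it can save you a failed playbook run to check that the credential files are where the playbooks expect them. The helpers below are a rough sketch (check_aws_creds and check_gcp_creds are hypothetical names): they only confirm that the expected fields are present, not that the credentials are valid. On macOS, $HOME/.gcp/gcp-creds.json is the same location as /Users/<user>/.gcp/gcp-creds.json.

```shell
# Hypothetical helpers: sanity-check the credential files before provisioning.
# Defaults mirror the paths above; pass a path if you changed default-vars.yml.

check_aws_creds() {
  local file="${1:-$HOME/.aws/credentials}"
  # The AWS shared credentials file is INI-style and holds both of these keys.
  grep -q '^[[:space:]]*aws_access_key_id' "$file" 2>/dev/null &&
    grep -q '^[[:space:]]*aws_secret_access_key' "$file" 2>/dev/null
}

check_gcp_creds() {
  local file="${1:-$HOME/.gcp/gcp-creds.json}"
  # Service Account key files from Google contain "type": "service_account".
  grep -q '"type":[[:space:]]*"service_account"' "$file" 2>/dev/null
}
```

For example:

$ check_aws_creds && echo "AWS credentials file looks OK"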

Kubernetes Cluster Provisioning Process

1: Local Setup of Repository and Files

The first order of business is to clone the public GitHub repository that hosts the Ansible playbooks needed to provision and bootstrap your Kubernetes cluster:

$ git clone https://github.com/michaelford85/easy-cloud-k8s-cluster.git

This will clone the repository locally into your present working directory.

2: Download collections and roles

Navigate to the easy-cloud-k8s-cluster directory in your terminal, then run the following two commands:

$ ansible-galaxy collection install -r requirements.yml

$ ansible-galaxy role install -r requirements.yml

The specific collections and roles that get downloaded are listed in easy-cloud-k8s-cluster/requirements.yml.

The roles contain the Ansible tasks that install Docker, pip, and the Kubernetes components on the newly provisioned cloud instances. The collections include modules to manage AWS, GCP, and Kubernetes, along with some general-purpose functions.

3: Setting your Kubernetes cluster parameters

Before running the Kubernetes provisioner, you’ll need to set some parameters in easy-cloud-k8s-cluster/vars/default-vars.yml. The example in this tutorial uses default variables set up to provision a 4-worker cluster in AWS running Kubernetes version 1.21.

4: Running the provisioner

After setting up your parameters, provision your instances with a playbook run:

$ ansible-playbook provision-kubeadm-cluster.yml

This playbook will provision your prescribed instances in your cloud provider, and copy the public/private SSH keys to your working_dir (/tmp by default).
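If you would rather keep your own settings out of the repository’s copy of default-vars.yml, Ansible can load variables from a separate file with -e @file, and extra vars take precedence over variables defined elsewhere. The wrapper below is a hypothetical sketch (provision and my-vars.yml are illustrative names), and it assumes the playbook reads its settings as ordinary Ansible variables that extra vars can override.

```shell
# Hypothetical wrapper around the provisioning step.
# Usage: provision [overrides-file]
provision() {
  local overrides="${1:-}"
  if [ -n "$overrides" ]; then
    # "-e @file" loads extra vars from a YAML/JSON file; extra vars have the
    # highest precedence, so they win over values from vars/default-vars.yml.
    ansible-playbook provision-kubeadm-cluster.yml -e "@${overrides}"
  else
    ansible-playbook provision-kubeadm-cluster.yml
  fi
}
```

For example, from the repository root:

$ provision my-vars.yml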

5: Confirm cloud inventory

You can confirm that these instances exist without logging in to your cloud provider’s web console by running the ansible-inventory command and specifying the appropriate dynamic inventory source (found in the repository):

- AWS: k8s.aws_ec2.yml

- GCP: k8s.gcp.yml

An example of the command and output is here:
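The only difference between providers is the inventory source passed with -i. A minimal sketch of the command for either provider; inventory_for and show_instances are hypothetical helpers, and --graph is just one way to display the inventory (ansible-inventory --list prints the same data as JSON).

```shell
# Hypothetical helper: map a provider name to the repository's inventory source.
inventory_for() {
  case "$1" in
    aws) echo "k8s.aws_ec2.yml" ;;
    gcp) echo "k8s.gcp.yml" ;;
    *)   echo "unknown provider: $1 (expected aws or gcp)" >&2; return 1 ;;
  esac
}

# Hypothetical helper: list the instances the dynamic inventory finds.
show_instances() {
  local inv
  inv="$(inventory_for "$1")" || return 1
  # --graph prints groups and hosts as a tree.
  ansible-inventory -i "$inv" --graph
}
```

For example, from the repository root:

$ show_instances aws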

6: Bootstrap the cluster

Now that the instances are provisioned, you can install Kubernetes. The bootstrap-kubeadm-cluster.yml playbook will:

- Install the pip3 package management system on all nodes

- Install the Docker container runtime on all nodes

- Install the appropriate Kubernetes components on all nodes

- Copy the kubeconfig file from the master node to your local workstation

Again, specify the appropriate dynamic inventory source for the cloud provider you are using. This is the command for the AWS-based cluster in this tutorial:

$ sudo ansible-playbook bootstrap-kubeadm-cluster.yml -i k8s.aws_ec2.yml

The bootstrap process will take 2-4 minutes to complete.
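The same command works for a GCP cluster once the GCP inventory source is swapped in. A hypothetical wrapper (bootstrap is an illustrative name) that picks the inventory source for you might look like this:

```shell
# Hypothetical wrapper: run the bootstrap playbook against either provider.
bootstrap() {
  local inv
  case "$1" in
    aws) inv="k8s.aws_ec2.yml" ;;
    gcp) inv="k8s.gcp.yml" ;;
    *)   echo "usage: bootstrap aws|gcp" >&2; return 1 ;;
  esac
  # Same command as above; only the -i argument changes per provider.
  sudo ansible-playbook bootstrap-kubeadm-cluster.yml -i "$inv"
}
```

For example:

$ bootstrap gcp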

7: Set the KUBECONFIG environment variable

In order to access your Kubernetes cluster via the command line, set the KUBECONFIG environment variable to point to your newly created {{ working_dir }}/{{ cloud_prefix }}-config file. Using the values from our example default-vars.yml file:

$ export KUBECONFIG=/tmp/mford-cluster-config
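If you change working_dir or cloud_prefix, the path changes with them. The sketch below derives the path from the vars file instead of hard-coding it. It assumes both variables appear as simple top-level "key: value" lines in vars/default-vars.yml; get_var is a naive line matcher rather than a YAML parser, and both function names are hypothetical.

```shell
# Naive lookup of a top-level "key: value" line (assumes simple, unnested YAML
# with no trailing comments); strips surrounding quotes from the value.
get_var() {
  sed -n "s/^$1:[[:space:]]*//p" "$2" | head -n 1 | tr -d "\"'"
}

# Mirrors the {{ working_dir }}/{{ cloud_prefix }}-config naming used above.
kubeconfig_path() {
  local vars="${1:-vars/default-vars.yml}" dir prefix
  dir="$(get_var working_dir "$vars")"
  prefix="$(get_var cloud_prefix "$vars")"
  if [ -z "$dir" ] || [ -z "$prefix" ]; then
    echo "working_dir or cloud_prefix not found in $vars" >&2
    return 1
  fi
  echo "${dir%/}/${prefix}-config"
}
```

Run from the root of the repository:

$ export KUBECONFIG="$(kubeconfig_path)"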

Now you can use kubectl to confirm both that you can authenticate to the cluster and that its nodes report a Ready status.
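One way to check both at once is sketched below (cluster_ready is a hypothetical helper): kubectl get nodes only succeeds if your kubeconfig credentials are accepted, and kubectl wait blocks until every node reports the Ready condition or the timeout expires.

```shell
# Hypothetical helper: confirm kubectl can authenticate and that nodes are Ready.
cluster_ready() {
  local timeout="${1:-300s}"
  # Any successful API call proves the kubeconfig credentials work.
  kubectl get nodes -o wide || return 1
  # Block until every node reports the Ready condition or the timeout expires.
  kubectl wait --for=condition=Ready nodes --all --timeout="$timeout"
}
```

For example:

$ cluster_ready 120s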

Deploy your first pod

To confirm the cluster is running properly, you can deploy an nginx pod and a NodePort service, both defined in the repository’s nginx-pod.yml manifest.

Because this manifest file already exists, run the following command to deploy the pod and service:

$ kubectl create -f nginx-pod.yml

You can confirm the pod and service are running with the kubectl -n default get all command:

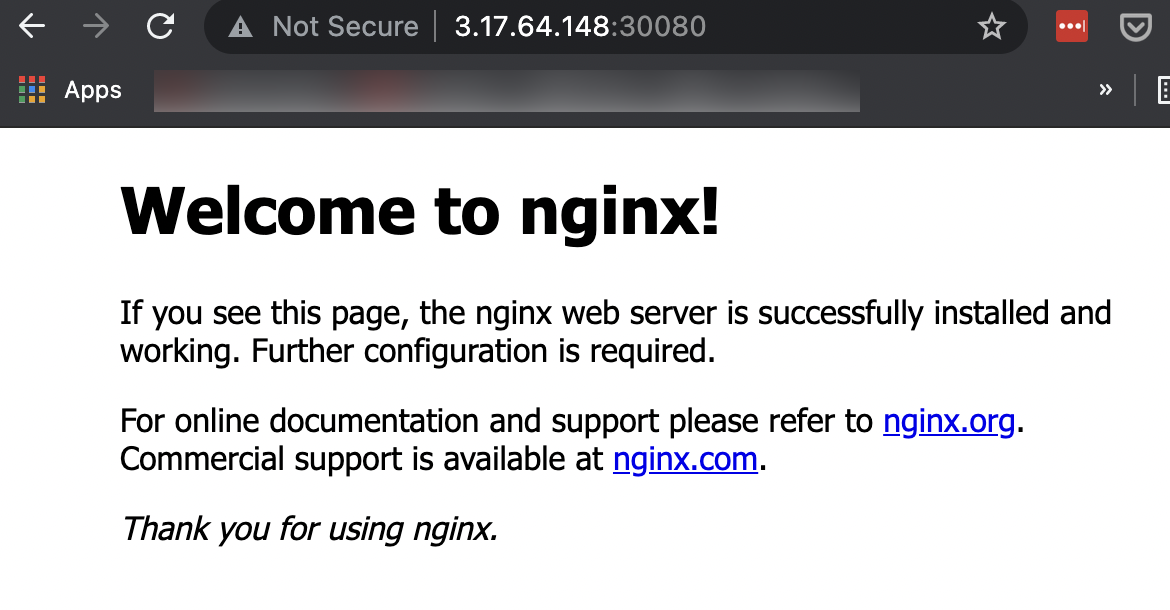
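To see exactly which worker node the nginx pod was scheduled on, you can ask kubectl for just the pod and node names. A small sketch (pod_nodes is a hypothetical helper); kubectl -n default get pods -o wide shows the same NODE information along with other columns.

```shell
# Hypothetical helper: show which worker node each pod in "default" landed on.
pod_nodes() {
  # custom-columns pulls the pod name and the node it was scheduled to.
  kubectl -n default get pods --no-headers \
    -o custom-columns=POD:.metadata.name,NODE:.spec.nodeName
}
```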

The nginx webserver pod runs on only one of your Kubernetes worker nodes, and the NodePort service makes it reachable on TCP port 30080. In the spirit of automation, the repository includes a playbook called nginx-public-ip-address.yml that prints the URL you need to access nginx in your browser.

Opening this URL in your browser will take you to the default nginx page.
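If you prefer to check from the terminal, the URL is simply a worker node’s public IP address followed by the NodePort. The helpers below are a sketch (nginx_url and check_nginx are hypothetical names): use the IP address that nginx-public-ip-address.yml reports, and note that the check assumes the stock nginx welcome page is being served. The 203.0.113.10 address in the example is a documentation placeholder, not one of your nodes.

```shell
# Build the URL for a node's public IP; 30080 is the NodePort used in this post.
nginx_url() {
  local ip="$1" port="${2:-30080}"
  if [ -z "$ip" ]; then
    echo "usage: nginx_url <node-public-ip> [port]" >&2
    return 1
  fi
  echo "http://${ip}:${port}"
}

# Fetch the page and look for the stock nginx welcome text.
check_nginx() {
  local url
  url="$(nginx_url "$@")" || return 1
  # -f: fail on HTTP errors, -sS: quiet except for errors, -m 10: 10 s cap.
  curl -fsS -m 10 "$url" | grep -q "Welcome to nginx"
}
```

For example:

$ check_nginx 203.0.113.10 && echo "nginx is reachable"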

Congratulations - you can now use this cluster for your Linux Foundation Exam studies!

Tear down the cluster

After you’re done with your study session, tearing down your cluster takes a single playbook command:

$ ansible-playbook teardown-cluster.yml
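To double-check the result, you can list the dynamic inventory again once the playbook finishes, and stop pointing kubectl at the now-stale kubeconfig. A minimal sketch, where teardown is a hypothetical wrapper around the command above; review the inventory output to confirm no cluster instances remain.

```shell
# Hypothetical wrapper: tear down, list what the inventory still sees,
# then stop pointing kubectl at the deleted cluster's kubeconfig.
teardown() {
  local inv
  case "$1" in
    aws) inv="k8s.aws_ec2.yml" ;;
    gcp) inv="k8s.gcp.yml" ;;
    *)   echo "usage: teardown aws|gcp" >&2; return 1 ;;
  esac
  ansible-playbook teardown-cluster.yml || return 1
  # Should no longer list your cluster's instances once teardown has finished.
  ansible-inventory -i "$inv" --graph
  # The kubeconfig in working_dir points at a cluster that no longer exists.
  unset KUBECONFIG
}
```

Because teardown is a shell function, the unset KUBECONFIG only affects the shell session you defined it in:

$ teardown aws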

This process should take no more than 2 minutes.

Conclusion

Using this repository, you can set up a Kubernetes environment that matches the one you will encounter in the Linux Foundation Kubernetes exams, with minimal effort and cost. I hope this helps you get past one of the biggest hurdles to learning Kubernetes - having a place to practice!